Hadoop

Download JAVA from

http://www.oracle.com/technetwork/java/javase/downloads/index-jsp-138363.html

Download HADOOP from

http://archive.apache.org/dist/hadoop/core/

copy both java and hadoop to /usr/local

$ sudo bash

password:

# mv jdk1.7.0_71 /usr/local/java

# exit

#mv hadoop-2.7.2 /usr/local/ hadoop

Change the ownership

sudo chown -R hadoop:hadoop hadoop

sudo chown -R hadoop:hadoop java

setting java and haoop path

~$gedit ~/.bashrc

JAVA_HOME=/usr/local/java

HADOOP_HOME=/usr/local/hadoop

HADOOP_CLASSPATH=/usr/local/java/lib/tools.jar

PATH=$PATH:$JAVA_HOME/bin:$JAVA_HOME/sbin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

export HADOOP_HOME

export HADOOP_CLASSPATH

export JAVA_HOME

export PATH

Installing Software

For example on Ubuntu Linux:

$ sudo apt-get install ssh $ sudo apt-get install rsync

The following are the list of files that you have to edit to configure Hadoop.

Fle location: /usr/local/hadoop/etc/hadoop

Hadoop-env.sh

change the following lines

# The java implementation to use.

export JAVA_HOME=/usr/local/java

core-site.xml

The core-site.xml file contains information such as the port number used for Hadoop instance,memory allocated for the file system, memory limit for storing the data, and size of Read/Write buffers.

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>fs.default.name</name>

<value>hdfs://localhost:9000</value>

</property>

</configuration>

hdfs-site.xml

The hdfs-site.xml file contains information such as the value of replication data, namenode path,and datanode paths of your local file systems. It means the place where you want to store the Hadoop infrastructure

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.name.dir</name>

<value>file:///home/<username>/hdfs/namenode</value>

</property>

<property>

<name>dfs.data.dir</name>

<value>file:///home/<username>/hdfs/datanode</value>

</property>

</configuration>

create folder in the specified path /home/<username>/hdfs/namenode

and /home/<username>/hdfs/datanode

$mkdir -p /home/<username>/hdfs/namenode

yarn-site.xml

This file is used to configure yarn into Hadoop. Open the yarn-site.xml file and add the following

properties in between the <configuration>, </configuration> tags in this file.

<configuration>

<!-- Site specific YARN configuration properties -->

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

</configuration>

mapred-site.xml

This file is used to specify which MapReduce framework we are using. By default, Hadoopcontains a template of yarn-site.xml.

First of all, it is required to copy the file from mapred-site,xml.template to mapred-site.xml file using the following command.

$ cp mapred-site.xml.template mapred-site.xml

<configuration>

<property>

<name>mapred.job.tracker</name>

<value>localhost:9001</value>

</property>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>

setup passphraseless ssh

Now check that you can ssh to the localhost without a passphrase:$ ssh localhost

If you cannot ssh to localhost without a passphrase, execute the following commands:

$ ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa

$ cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

$ chmod 0600 ~/.ssh/authorized_keys

Verifying Hadoop Installation

Step 1: Name Node Setup$ cd ~

$ hdfs namenode -format

Step 2: Verifying Hadoop dfs

$ start-dfs.sh

Step 3: Verifying Yarn Script

$ start-yarn.sh

Step 4: Accessing Hadoop on Browser

http://localhost:50070/

In Hadoop 2.x, web UI port is 50070 but in Hadoop3.x, it is moved to 9870. You can access HDFS web UI from localhost:9870 as shown in the below screenshot

http://localhost:9870/

Step 5: Verify All Applications for Cluster

http://localhost:8088/

To check running services

$jps

Remote Procedure Call (RPC) is a protocol that one program can use to request a service from a program located in another computer in a network without having to understand network details.

WEB which is denoted in the table is the WEB port number.

| Hadoop Daemons | RPC Port | WEB – UI |

| NameNode | 9000 | 50070 (Hadoop 2.x) 9870(Hadoop 3.x) |

| SecondaryNameNode | 50090 | |

| DataNode | 50010 | 50075 |

| Resource Manager | 8030 | 8088 |

| Node Manager | 8040 | 8042 |

checking

Make the HDFS directories required to execute MapReduce jobs:$ bin/hdfs dfs -mkdir /user

$ bin/hdfs dfs -mkdir /user/<username>

Copy the input files into the distributed filesystem:

$ bin/hdfs dfs -put etc/hadoop input

Run some of the examples provided:

$ bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.2.jar grep input output

'dfs[a-z.]+'

Examine the output files: Copy the output files from the distributed filesystem to the local

filesystem and examine them:

$ bin/hdfs dfs -get output output

$ cat output/*

View the output files on the distributed filesystem:

$ bin/hdfs dfs -cat output/*

map reduce word count program

import java.io.IOException;import java.util.StringTokenizer;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.Reducer;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class WordCount {

public static class TokenizerMapper

extends Mapper<Object, Text, Text, IntWritable>{

private final static IntWritable one = new IntWritable(1);

private Text word = new Text();

public void map(Object key, Text value, Context context

) throws IOException, InterruptedException {

StringTokenizer itr = new StringTokenizer(value.toString());

while (itr.hasMoreTokens()) {

word.set(itr.nextToken());

context.write(word, one);

}

}

}

public static class IntSumReducer

extends Reducer<Text,IntWritable,Text,IntWritable> {

private IntWritable result = new IntWritable();

public void reduce(Text key, Iterable<IntWritable> values,

Context context

) throws IOException, InterruptedException {

int sum = 0;

for (IntWritable val : values) {

sum += val.get();

}

result.set(sum);

context.write(key, result);

}

}

public static void main(String[] args) throws Exception {

Configuration conf = new Configuration();Job job = Job.getInstance(conf, "word count");

job.setJarByClass(WordCount.class);

job.setMapperClass(TokenizerMapper.class);

job.setCombinerClass(IntSumReducer.class);

job.setReducerClass(IntSumReducer.class);

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(IntWritable.class);

FileInputFormat.addInputPath(job, new Path(args[0]));

FileOutputFormat.setOutputPath(job, new Path(args[1]));

System.exit(job.waitForCompletion(true) ? 0 : 1);

}

}

Usage

Assuming environment variables are set as follows:export JAVA_HOME=/usr/java/default

export PATH=${JAVA_HOME}/bin:${PATH}

export HADOOP_CLASSPATH=${JAVA_HOME}/lib/tools.jar

Compile WordCount.java and create a jar:

$ bin/hadoop com.sun.tools.javac.Main WordCount.java

$ jar cf wc.jar WordCount*.class

Assuming that:

/user/joe/wordcount/input - input directory in HDFS

/user/joe/wordcount/output - output directory in HDFS

Sample text-files as input:

$ bin/hadoop fs -ls /user/joe/wordcount/input/ /user/joe/wordcount/input/file01

/user/joe/wordcount/input/file02

$ bin/hadoop fs -cat /user/joe/wordcount/input/file01

Hello World Bye World

$ bin/hadoop fs -cat /user/joe/wordcount/input/file02

Hello Hadoop Goodbye Hadoop

Run the application:

$ bin/hadoop jar wc.jar WordCount /user/joe/wordcount/input /user/joe/wordcount/output

Output:

$ bin/hadoop fs -cat /user/joe/wordcount/output/part-r-00000`

Bye 1

Goodbye 1

Hadoop 2

Hello 2

World 2`

Hadoop commands

remove directory

hadoop fs -rm -rf /user/the/path/to/your/dir

common errors

error1:ls: Call From puppet/127.0.1.1 to localhost:9000 failed on connection exception:

java.net.ConnectException: Connection refused; For more details see:

http://wiki.apache.org/hadoop/ConnectionRefused

solution:check services start

$jps

$start-dfs.sh

$start-yarn-sh

error2

labadmin@puppet:~$ hadoop fs -ls

ls: `.': No such file or directory

solution:$hadoop fs -ls ~/

---->specify directories.

Error3 :

$hadoop jar ~/wc.jar ~/test ~/output

Exception in thread "main" java.lang.ClassNotFoundException: /home/labadmin/test

at java.lang.Class.forName0(Native Method)

at java.lang.Class.forName(Class.java:348)

at org.apache.hadoop.util.RunJar.run(RunJar.java:214)

at org.apache.hadoop.util.RunJar.main(RunJar.java:136)

solution:

$ hadoop jar ~/wc.jar WordCount ~/test ~/output ---->check the main method class in the java file.

Error4:

~$ hadoop jar ~/wc.jar WordCount ~/file2 ~/output

16/04/29 11:41:00 INFO client.RMProxy: Connecting to ResourceManager at /0.0.0.0:8032

Exception in thread "main" org.apache.hadoop.mapred.FileAlreadyExistsException: Output

directory hdfs://localhost:9000/home/labadmin/output already exists

at

org.apache.hadoop.mapreduce.lib.output.FileOutputFormat.checkOutputSpecs(FileOutputFo

rmat.java:146)

at org.apache.hadoop.mapreduce.JobSubmitter.checkSpecs(JobSubmitter.java:266)

at

org.apache.hadoop.mapreduce.JobSubmitter.submitJobInternal(JobSubmitter.java:139)

at org.apache.hadoop.mapreduce.Job$10.run(Job.java:1290)

at org.apache.hadoop.mapreduce.Job$10.run(Job.java:1287)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at

org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1657)

at org.apache.hadoop.mapreduce.Job.submit(Job.java:1287)

at org.apache.hadoop.mapreduce.Job.waitForCompletion(Job.java:1308)

at WordCount.main(WordCount.java:59)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at

sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.util.RunJar.run(RunJar.java:221)

at org.apache.hadoop.util.RunJar.main(RunJar.java:136)Solution:

Check the <filename>file2 in the hdfs file system.

$hadoop fs -ls ~/

drwxr-xr-x - labadmin supergroup

drwxr-xr-x - labadmin supergroup

drwxr-xr-x - labadmin supergroup

if not copy files from local to hdfs

$hadoop fs -put ~/file2 ~/

hadoop help

http://hadoop.apache.org/

- Hadoop Master: 10.0.0.1

(hadoop-master)

- Hadoop Slave: 10.0.0.2

(hadoop-slave1)

- Hadoop Slave: 10.0.0.3

(hadoop-slave2)

Configuring Key Based

Login

# su hadoop

$ ssh-keygen -t rsa

$ ssh-copy-id -i ~/.ssh/id_rsa.pub hadoop@hadoop-master

$ ssh-copy-id -i ~/.ssh/id_rsa.pub hadoop@hadoop-slave1

$ ssh-copy-id -i ~/.ssh/id_rsa.pub hadoop@hadoop-slave-2

$ chmod 0600 ~/.ssh/authorized_keys

$ exit

core-site.xml

<configuration>

<property>

<name>fs.default.name</name>

<value>hdfs://hadoop-master:9000/</value>

</property>

<property>

<name>dfs.permissions</name>

<value>false</value>

</property>

</configuration>

hdfs-site.xml

<configuration>

<property>

<name>dfs.data.dir</name>

<value>>/home/hadoop/hdfs/namenode</value>

<final>true</final>

</property>

<property>

<name>dfs.name.dir</name>

<value>/home/hadoop/hdfs/namenode</value>

<final>true</final>

</property>

<property>

<name>dfs.replication</name>

<value>2</value>

</property>

</configuration>

mapred-site.xml

<configuration>

<property>

<name>mapred.job.tracker</name>

<value>hadoop-master:9001</value>

</property>

</configuration>

hadoop-env.sh

Installing Hadoop on

Slave Servers

# su hadoop

$ cd /opt/hadoop

$ scp -r hadoop hadoop-slave-1:/opt/hadoop

$ scp -r hadoop hadoop-slave-2:/opt/hadoop

Configuring Master Node

$ vi etc/hadoop/masters

hadoop-master

Configuring Slave Node

$ vi etc/hadoop/slaves

hadoop-slave-1

hadoop-slave-2

Format Name Node on Hadoop Master

$hdfs namenode –format

# su hadoop

$ sudo bash

# service ufw disable

- configure the directory where hadoop will store its

data files, the network ports it listens to, etc.

- setup will use Hadoop’s Distributed File System(HDFS),

even though little “cluster” only contains single local machine.

There are 0 datanode(s) running

and no node(s) are excluded in this operation

HDFS Commands Guide

All HDFS commands are invoked by the bin/hdfs script. Running the hdfs script without any arguments prints the description for all commands.

Usage: hdfs [SHELL_OPTIONS] COMMAND [GENERIC_OPTIONS] [COMMAND_OPTIONS]

Hadoop has an option parsing framework that employs parsing generic options as well as running classes.

| COMMAND_OPTIONS | Description |

|---|---|

| --config --loglevel |

The common set of shell options. These are documented on the Commands Manual page. |

| GENERIC_OPTIONS | The common set of options supported by multiple commands. See the Hadoop Commands Manual for more information. |

| COMMAND COMMAND_OPTIONS | Various commands with their options are described in the following sections. The commands have been grouped into User Commands and Administration Commands. |

User Commands

Commands useful for users of a hadoop cluster.classpath

Usage: hdfs classpathPrints the class path needed to get the Hadoop jar and the required libraries

dfs

Usage: hdfs dfs [COMMAND [COMMAND_OPTIONS]]Run a filesystem command on the file system supported in Hadoop. The various COMMAND_OPTIONS can be found at File System Shell Guide.

fetchdt

Usage: hdfs fetchdt [--webservice <namenode_http_addr>] <path>| COMMAND_OPTION | Description |

|---|---|

| --webservice https_address | use http protocol instead of RPC |

| fileName | File name to store the token into. |

fsck

Usage: hdfs fsck <path>

[-list-corruptfileblocks |

[-move | -delete | -openforwrite]

[-files [-blocks [-locations | -racks]]]

[-includeSnapshots]

[-storagepolicies] [-blockId <blk_Id>]

| COMMAND_OPTION | Description |

|---|---|

| path | Start checking from this path. |

| -delete | Delete corrupted files. |

| -files | Print out files being checked. |

| -files -blocks | Print out the block report |

| -files -blocks -locations | Print out locations for every block. |

| -files -blocks -racks | Print out network topology for data-node locations. |

| -includeSnapshots | Include snapshot data if the given path indicates a snapshottable directory or there are snapshottable directories under it. |

| -list-corruptfileblocks | Print out list of missing blocks and files they belong to. |

| -move | Move corrupted files to /lost+found. |

| -openforwrite | Print out files opened for write. |

| -storagepolicies | Print out storage policy summary for the blocks. |

| -blockId | Print out information about the block. |

getconf

Usage:hdfs getconf -namenodes hdfs getconf -secondaryNameNodes hdfs getconf -backupNodes hdfs getconf -includeFile hdfs getconf -excludeFile hdfs getconf -nnRpcAddresses hdfs getconf -confKey [key]

| COMMAND_OPTION | Description |

|---|---|

| -namenodes | gets list of namenodes in the cluster. |

| -secondaryNameNodes | gets list of secondary namenodes in the cluster. |

| -backupNodes | gets list of backup nodes in the cluster. |

| -includeFile | gets the include file path that defines the datanodes that can join the cluster. |

| -excludeFile | gets the exclude file path that defines the datanodes that need to decommissioned. |

| -nnRpcAddresses | gets the namenode rpc addresses |

| -confKey [key] | gets a specific key from the configuration |

lsSnapshottableDir

Usage: hdfs lsSnapshottableDir [-help]| COMMAND_OPTION | Description |

|---|---|

| -help | print help |

jmxget

Usage: hdfs jmxget [-localVM ConnectorURL | -port port | -server mbeanserver | -service service]| COMMAND_OPTION | Description |

|---|---|

| -help | print help |

| -localVM ConnectorURL | connect to the VM on the same machine |

| -port mbean server port | specify mbean server port, if missing it will try to connect to MBean Server in the same VM |

| -service | specify jmx service, either DataNode or NameNode, the default |

oev

Usage: hdfs oev [OPTIONS] -i INPUT_FILE -o OUTPUT_FILERequired command line arguments:

| COMMAND_OPTION | Description |

|---|---|

| -i,--inputFile arg | edits file to process, xml (case insensitive) extension means XML format, any other filename means binary format |

| -o,--outputFile arg | Name of output file. If the specified file exists, it will be overwritten, format of the file is determined by -p option |

Optional command line arguments:

| COMMAND_OPTION | Description |

|---|---|

| -f,--fix-txids | Renumber the transaction IDs in the input, so that there are no gaps or invalid transaction IDs. |

| -h,--help | Display usage information and exit |

| -r,--ecover | When reading binary edit logs, use recovery mode. This will give you the chance to skip corrupt parts of the edit log. |

| -p,--processor arg | Select which type of processor to apply against image file, currently supported processors are: binary (native binary format that Hadoop uses), xml (default, XML format), stats (prints statistics about edits file) |

| -v,--verbose | More verbose output, prints the input and output filenames, for processors that write to a file, also output to screen. On large image files this will dramatically increase processing time (default is false). |

oiv

Usage: hdfs oiv [OPTIONS] -i INPUT_FILERequired command line arguments:

| COMMAND_OPTION | Description |

|---|---|

| -i,--inputFile arg | edits file to process, xml (case insensitive) extension means XML format, any other filename means binary format |

Optional command line arguments:

| COMMAND_OPTION | Description |

|---|---|

| -h,--help | Display usage information and exit |

| -o,--outputFile arg | Name of output file. If the specified file exists, it will be overwritten, format of the file is determined by -p option |

| -p,--processor arg | Select which type of processor to apply against image file, currently supported processors are: binary (native binary format that Hadoop uses), xml (default, XML format), stats (prints statistics about edits file) |

oiv_legacy

Usage: hdfs oiv_legacy [OPTIONS] -i INPUT_FILE -o OUTPUT_FILE| COMMAND_OPTION | Description |

|---|---|

| -h,--help | Display usage information and exit |

| -i,--inputFile arg | edits file to process, xml (case insensitive) extension means XML format, any other filename means binary format |

| -o,--outputFile arg | Name of output file. If the specified file exists, it will be overwritten, format of the file is determined by -p option |

snapshotDiff

Usage: hdfs snapshotDiff <path> <fromSnapshot> <toSnapshot>Determine the difference between HDFS snapshots. See the HDFS Snapshot Documentation for more information.

Administration Commands

Commands useful for administrators of a hadoop cluster.balancer

Usage: hdfs balancer

[-threshold <threshold>]

[-policy <policy>]

[-exclude [-f <hosts-file> | <comma-separated list of hosts>]]

[-include [-f <hosts-file> | <comma-separated list of hosts>]]

[-idleiterations <idleiterations>]

| COMMAND_OPTION | Description |

|---|---|

| -policy <policy> | datanode (default): Cluster is balanced if each datanode is balanced. blockpool: Cluster is balanced if each block pool in each datanode is balanced. |

| -threshold <threshold> | Percentage of disk capacity. This overwrites the default threshold. |

| -exclude -f <hosts-file> | <comma-separated list of hosts> | Excludes the specified datanodes from being balanced by the balancer. |

| -include -f <hosts-file> | <comma-separated list of hosts> | Includes only the specified datanodes to be balanced by the balancer. |

| -idleiterations <iterations> | Maximum number of idle iterations before exit. This overwrites the default idleiterations(5). |

Note that the blockpool policy is more strict than the datanode policy.

cacheadmin

Usage: hdfs cacheadmin -addDirective -path <path> -pool <pool-name> [-force] [-replication <replication>] [-ttl <time-to-live>]See the HDFS Cache Administration Documentation for more information.

crypto

Usage:hdfs crypto -createZone -keyName <keyName> -path <path> hdfs crypto -help <command-name> hdfs crypto -listZones

datanode

Usage: hdfs datanode [-regular | -rollback | -rollingupgrace rollback]| COMMAND_OPTION | Description |

|---|---|

| -regular | Normal datanode startup (default). |

| -rollback | Rollback the datanode to the previous version. This should be used after stopping the datanode and distributing the old hadoop version. |

| -rollingupgrade rollback | Rollback a rolling upgrade operation. |

dfsadmin

Usage: hdfs dfsadmin [GENERIC_OPTIONS]

[-report [-live] [-dead] [-decommissioning]]

[-safemode enter | leave | get | wait]

[-saveNamespace]

[-rollEdits]

[-restoreFailedStorage true |false |check]

[-refreshNodes]

[-setQuota <quota> <dirname>...<dirname>]

[-clrQuota <dirname>...<dirname>]

[-setSpaceQuota <quota> <dirname>...<dirname>]

[-clrSpaceQuota <dirname>...<dirname>]

[-setStoragePolicy <path> <policyName>]

[-getStoragePolicy <path>]

[-finalizeUpgrade]

[-rollingUpgrade [<query> |<prepare> |<finalize>]]

[-metasave filename]

[-refreshServiceAcl]

[-refreshUserToGroupsMappings]

[-refreshSuperUserGroupsConfiguration]

[-refreshCallQueue]

[-refresh <host:ipc_port> <key> [arg1..argn]]

[-reconfig <datanode |...> <host:ipc_port> <start |status>]

[-printTopology]

[-refreshNamenodes datanodehost:port]

[-deleteBlockPool datanode-host:port blockpoolId [force]]

[-setBalancerBandwidth <bandwidth in bytes per second>]

[-allowSnapshot <snapshotDir>]

[-disallowSnapshot <snapshotDir>]

[-fetchImage <local directory>]

[-shutdownDatanode <datanode_host:ipc_port> [upgrade]]

[-getDatanodeInfo <datanode_host:ipc_port>]

[-triggerBlockReport [-incremental] <datanode_host:ipc_port>]

[-help [cmd]]

| COMMAND_OPTION | Description |

|---|---|

| -report [-live] [-dead] [-decommissioning] | Reports basic filesystem information and statistics. Optional flags may be used to filter the list of displayed DataNodes. |

| -safemode enter|leave|get|wait | Safe mode maintenance command. Safe mode is a Namenode state in which it 1. does not accept changes to the name space (read-only) 2. does not replicate or delete blocks. Safe mode is entered automatically at Namenode startup, and leaves safe mode automatically when the configured minimum percentage of blocks satisfies the minimum replication condition. Safe mode can also be entered manually, but then it can only be turned off manually as well. |

| -saveNamespace | Save current namespace into storage directories and reset edits log. Requires safe mode. |

| -rollEdits | Rolls the edit log on the active NameNode. |

| -restoreFailedStorage true|false|check | This option will turn on/off automatic attempt to restore failed storage replicas. If a failed storage becomes available again the system will attempt to restore edits and/or fsimage during checkpoint. ‘check’ option will return current setting. |

| -refreshNodes | Re-read the hosts and exclude files to update the set of Datanodes that are allowed to connect to the Namenode and those that should be decommissioned or recommissioned. |

| -setQuota <quota> <dirname>…<dirname> | See HDFS Quotas Guide for the detail. |

| -clrQuota <dirname>…<dirname> | See HDFS Quotas Guide for the detail. |

| -setSpaceQuota <quota> <dirname>…<dirname> | See HDFS Quotas Guide for the detail. |

| -clrSpaceQuota <dirname>…<dirname> | See HDFS Quotas Guide for the detail. |

| -setStoragePolicy <path> <policyName> | Set a storage policy to a file or a directory. |

| -getStoragePolicy <path> | Get the storage policy of a file or a directory. |

| -finalizeUpgrade | Finalize upgrade of HDFS. Datanodes delete their previous version working directories, followed by Namenode doing the same. This completes the upgrade process. |

| -rollingUpgrade [<query>|<prepare>|<finalize>] | See Rolling Upgrade document for the detail. |

| -metasave filename | Save Namenode’s primary data structures to filename in the directory specified by hadoop.log.dir property. filename is overwritten if it exists. filename will contain one line for each of the following 1. Datanodes heart beating with Namenode 2. Blocks waiting to be replicated 3. Blocks currently being replicated 4. Blocks waiting to be deleted |

| -refreshServiceAcl | Reload the service-level authorization policy file. |

| -refreshUserToGroupsMappings | Refresh user-to-groups mappings. |

| -refreshSuperUserGroupsConfiguration | Refresh superuser proxy groups mappings |

| -refreshCallQueue | Reload the call queue from config. |

| -refresh <host:ipc_port> <key> [arg1..argn] | Triggers a runtime-refresh of the resource specified by <key> on <host:ipc_port>. All other args after are sent to the host. |

| -reconfig <datanode |…> <host:ipc_port> <start|status> | Start reconfiguration or get the status of an ongoing reconfiguration. The second parameter specifies the node type. Currently, only reloading DataNode’s configuration is supported. |

| -printTopology | Print a tree of the racks and their nodes as reported by the Namenode |

| -refreshNamenodes datanodehost:port | For the given datanode, reloads the configuration files, stops serving the removed block-pools and starts serving new block-pools. |

| -deleteBlockPool datanode-host:port blockpoolId [force] | If force is passed, block pool directory for the given blockpool id on the given datanode is deleted along with its contents, otherwise the directory is deleted only if it is empty. The command will fail if datanode is still serving the block pool. Refer to refreshNamenodes to shutdown a block pool service on a datanode. |

| -setBalancerBandwidth <bandwidth in bytes per second> | Changes the network bandwidth used by each datanode during HDFS block balancing. <bandwidth> is the maximum number of bytes per second that will be used by each datanode. This value overrides the dfs.balance.bandwidthPerSec parameter. NOTE: The new value is not persistent on the DataNode. |

| -allowSnapshot <snapshotDir> | Allowing snapshots of a directory to be created. If the operation completes successfully, the directory becomes snapshottable. See the HDFS Snapshot Documentation for more information. |

| -disallowSnapshot <snapshotDir> | Disallowing snapshots of a directory to be created. All snapshots of the directory must be deleted before disallowing snapshots. See the HDFS Snapshot Documentation for more information. |

| -fetchImage <local directory> | Downloads the most recent fsimage from the NameNode and saves it in the specified local directory. |

| -shutdownDatanode <datanode_host:ipc_port> [upgrade] | Submit a shutdown request for the given datanode. See Rolling Upgrade document for the detail. |

| -getDatanodeInfo <datanode_host:ipc_port> | Get the information about the given datanode. See Rolling Upgrade document for the detail. |

| -triggerBlockReport [-incremental] <datanode_host:ipc_port> | Trigger a block report for the given datanode. If ‘incremental’ is specified, it will be otherwise, it will be a full block report. |

| -help [cmd] | Displays help for the given command or all commands if none is specified. |

haadmin

Usage: hdfs haadmin -checkHealth <serviceId>

hdfs haadmin -failover [--forcefence] [--forceactive] <serviceId> <serviceId>

hdfs haadmin -getServiceState <serviceId>

hdfs haadmin -help <command>

hdfs haadmin -transitionToActive <serviceId> [--forceactive]

hdfs haadmin -transitionToStandby <serviceId>

| COMMAND_OPTION | Description |

|---|---|

| -checkHealth | check the health of the given NameNode |

| -failover | initiate a failover between two NameNodes |

| -getServiceState | determine whether the given NameNode is Active or Standby |

| -transitionToActive | transition the state of the given NameNode to Active (Warning: No fencing is done) |

| -transitionToStandby | transition the state of the given NameNode to Standby (Warning: No fencing is done) |

journalnode

Usage: hdfs journalnodeThis comamnd starts a journalnode for use with HDFS HA with QJM.

mover

Usage: hdfs mover [-p <files/dirs> | -f <local file name>]| COMMAND_OPTION | Description |

|---|---|

| -f <local file> | Specify a local file containing a list of HDFS files/dirs to migrate. |

| -p <files/dirs> | Specify a space separated list of HDFS files/dirs to migrate. |

Note that, when both -p and -f options are omitted, the default path is the root directory.

namenode

Usage: hdfs namenode [-backup] |

[-checkpoint] |

[-format [-clusterid cid ] [-force] [-nonInteractive] ] |

[-upgrade [-clusterid cid] [-renameReserved<k-v pairs>] ] |

[-upgradeOnly [-clusterid cid] [-renameReserved<k-v pairs>] ] |

[-rollback] |

[-rollingUpgrade <downgrade |rollback> ] |

[-finalize] |

[-importCheckpoint] |

[-initializeSharedEdits] |

[-bootstrapStandby] |

[-recover [-force] ] |

[-metadataVersion ]

| COMMAND_OPTION | Description |

|---|---|

| -backup | Start backup node. |

| -checkpoint | Start checkpoint node. |

| -format [-clusterid cid] [-force] [-nonInteractive] | Formats the specified NameNode. It starts the NameNode, formats it and then shut it down. -force option formats if the name directory exists. -nonInteractive option aborts if the name directory exists, unless -force option is specified. |

| -upgrade [-clusterid cid] [-renameReserved <k-v pairs>] | Namenode should be started with upgrade option after the distribution of new Hadoop version. |

| -upgradeOnly [-clusterid cid] [-renameReserved <k-v pairs>] | Upgrade the specified NameNode and then shutdown it. |

| -rollback | Rollback the NameNode to the previous version. This should be used after stopping the cluster and distributing the old Hadoop version. |

| -rollingUpgrade <downgrade|rollback|started> | See Rolling Upgrade document for the detail. |

| -finalize | Finalize will remove the previous state of the files system. Recent upgrade will become permanent. Rollback option will not be available anymore. After finalization it shuts the NameNode down. |

| -importCheckpoint | Loads image from a checkpoint directory and save it into the current one. Checkpoint dir is read from property fs.checkpoint.dir |

| -initializeSharedEdits | Format a new shared edits dir and copy in enough edit log segments so that the standby NameNode can start up. |

| -bootstrapStandby | Allows the standby NameNode’s storage directories to be bootstrapped by copying the latest namespace snapshot from the active NameNode. This is used when first configuring an HA cluster. |

| -recover [-force] | Recover lost metadata on a corrupt filesystem. See HDFS User Guide for the detail. |

| -metadataVersion | Verify that configured directories exist, then print the metadata versions of the software and the image. |

secondarynamenode

Usage: hdfs secondarynamenode [-checkpoint [force]] | [-format] | [-geteditsize]| COMMAND_OPTION | Description |

|---|---|

| -checkpoint [force] | Checkpoints the SecondaryNameNode if EditLog size >= fs.checkpoint.size. If force is used, checkpoint irrespective of EditLog size. |

| -format | Format the local storage during startup. |

| -geteditsize | Prints the number of uncheckpointed transactions on the NameNode. |

storagepolicies

Usage: hdfs storagepoliciesLists out all storage policies. See the HDFS Storage Policy Documentation for more information.

zkfc

Usage: hdfs zkfc [-formatZK [-force] [-nonInteractive]]| COMMAND_OPTION | Description |

|---|---|

| -formatZK | Format the Zookeeper instance |

| -h | Display help |

Debug Commands

Useful commands to help administrators debug HDFS issues, like validating block files and calling recoverLease.verify

Usage: hdfs debug verify [-meta <metadata-file>] [-block <block-file>]| COMMAND_OPTION | Description |

|---|---|

| -block block-file | Optional parameter to specify the absolute path for the block file on the local file system of the data node. |

| -meta metadata-file | Absolute path for the metadata file on the local file system of the data node. |

recoverLease

Usage: hdfs debug recoverLease [-path <path>] [-retries <num-retries>]| COMMAND_OPTION | Description |

|---|---|

| [-path path] | HDFS path for which to recover the lease. |

| [-retries num-retries] | Number of times the client will retry calling recoverLease. The default number of retries is 1. |

FileInputFormat: Base class for all file-based InputFormatsTextInputFormat- each line will be treated as valueKeyValueTextInputFormat- First value before delimiter is key and rest is valueFixedLengthInputFormat- Each fixed length value is considered to be valueNLineInputFormat- N number of lines is considered one value/recordSequenceFileInputFormat- For binary

DBInputFormat to read from databasesInputFormat and OutputFormat classes. Here is an example configuration for a simple MapReduce job that reads and writes to text files:

Job job = new Job(getConf());

...

job.setInputFormatClass(TextInputFormat.class);

job.setOutputFormatClass(TextOutputFormat.class); https://hadoop.apache.org/docs/current/api/org/apache/hadoop/mapreduce/class-use/InputFormat.html JAVA Code For Writing A File To HDFS

import java.io.FileInputStream;

import java.io.InputStream;

import java.io.OutputStream;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.conf.Configured;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IOUtils;

import org.apache.hadoop.util.Tool;

import org.apache.hadoop.util.ToolRunner;

public class HdfsWriter extends Configured implements Tool { public int run(String[] args) throws Exception {

//String localInputPath = args[0];

//Path outputPath = new Path(args[0]);// ARGUMENT FOR OUTPUT_LOCATION

Path outputPath = new Path("/o1");// ARGUMENT FOR OUTPUT_LOCATION

Configuration conf = getConf();//get configruation ofhadoop system

System.out.println("Connecting to -- "+conf.get("fs.defaultFS"));

FileSystem fs = FileSystem.get(conf);//Destination file in hdfs

if (fs.exists(outputPath))

{

fs.delete(outputPath, true);

}

OutputStream os = fs.create(outputPath);

InputStream is = new BufferedInputStream(new FileInputStream("/home/username/a.txt"));//Data set is getting copied into input stream through buffer mechanism.

IOUtils.copyBytes(is, os, conf); // Copying the dataset from input stream to output stream

System.out.println(outputPath + " copied to HDFS");

return 0;

}

public static void main(String[] args) throws Exception

{

int returnCode = ToolRunner.run(new HdfsWriter(), args);

System.exit(returnCode);

}

}

for tutorial:

Errors

Error: Could not find or load main class org.apache.hadoop.mapreduce.v2.app.MRAppMaster

Please check whether your etc/hadoop/mapred-site.xml contains the below configuration:

<property>

<name>yarn.app.mapreduce.am.env</name>

<value>HADOOP_MAPRED_HOME=${full path of your hadoop distribution directory}</value>

</property>

<property>

<name>mapreduce.map.env</name>

<value>HADOOP_MAPRED_HOME=${full path of your hadoop distribution directory}</value>

</property>

<property>

<name>mapreduce.reduce.e nv</name>

<value>HADOOP_MAPRED_HOME=${full path of your hadoop distribution directory}</value>

</property>

[2017-12-01 12:55:58.279]mapred-site.xml as below<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<property>

<name>yarn.app.mapreduce.am.env</name>

<value>HADOOP_MAPRED_HOME=${HADOOP_HOME}</value>

</property>

<property>

<name>mapreduce.map.env</name>

<value>HADOOP_MAPRED_HOME=${HADOOP_HOME}</value>

</property>

<property>

<name>mapreduce.reduce.env</name>

<value>HADOOP_MAPRED_HOME=${HADOOP_HOME}</value>

</property>

</configuration>stop-all.sh

start-all.shCurtesy:https://stackoverflow.com/questions/47599789/hadoop- pagerank-error-when-running/ 55350372#55350372

Useful Links:

How to create and run Eclipse Project with a MapReduce Sample

Steps

Open Eclipse and create new Java Project

File -> New -> Java Project

Click Next and then Finish

Right click on WordCount Project under Package Explorer and go to properties in Eclipse IDE..

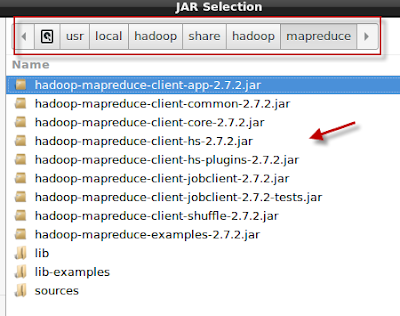

Select all jar files from below folders and add them to project. Below paths are based on my installation of hadoop. This may change depending on where you installed hadoop. My hadoop installation directory is /usr/local/hadoop. You should change this path according to your installation.

/usr/local/hadoop/share/Hadoop/mapreduce

/usr/local/hadoop/share/hadoop/common/lib

Hadoop WordCount Example

Right click on Project and Add new Class as WordCount.java

Copy Paste below code in this .java file. This code snippet is taken from official Apache Hadoop website. You should probably get latest code from this link instead of copying from here. There may be new version there.

----------------WordCount.java Start--------------------

import java.io.IOException;

import java.util.StringTokenizer;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.Reducer;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class WordCount {

public static class TokenizerMapper

extends Mapper<Object, Text, Text, IntWritable>{

private final static IntWritable one = new IntWritable(1);

private Text word = new Text();

public void map(Object key, Text value, Context context

) throws IOException, InterruptedException {

StringTokenizer itr = new StringTokenizer(value.toString());

while (itr.hasMoreTokens()) {

word.set(itr.nextToken());

context.write(word, one);

}

}

}

public static class IntSumReducer

extends Reducer<Text,IntWritable,Text,IntWritable> {

private IntWritable result = new IntWritable();

public void reduce(Text key, Iterable<IntWritable> values,

Context context

) throws IOException, InterruptedException {

int sum = 0;

for (IntWritable val : values) {

sum += val.get();

}

result.set(sum);

context.write(key, result);

}

}

public static void main(String[] args) throws Exception {

Configuration conf = new Configuration();

Job job = Job.getInstance(conf, "word count");

job.setJarByClass(WordCount.class);

job.setMapperClass(TokenizerMapper.class);

job.setCombinerClass(IntSumReducer.class);

job.setReducerClass(IntSumReducer.class);

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(IntWritable.class);

FileInputFormat.addInputPath(job, new Path(args[0]));

FileOutputFormat.setOutputPath(job, new Path(args[1]));

System.exit(job.waitForCompletion(true) ? 0 : 1);

}

}

----------------WordCount.java End--------------------

Make sure there are no errors in code after paste. Then Right click on Project and Export JAR file.

Select Jar file option under Java.

Click Next. Uncheck all other resources. Then provide path for exporting .jar file. It could be any path but remember where you exported it. You need this path for running jar file later.

Keep options selected as below.

Click Next and Finish.

Once exported, it is time to run JAR file. But before that, create input folder on HDFS that will hold one or more text files for counting words.

hadoop fs -mkdir input

Create a text file with some text and copy it in Input folder. You can create multiple text files if you wish.

vi wordcounttest.txt

Add some sample test in this file and save it. Under Ubuntu you can also use nano editor which is easier than vieditor.

hadoop fs -put wordcounttest.txt input/wordcounttest.txt

Make sure output directory do not exist yet. If it already exists then remove it with below command, otherwise JAR will throw an error that directory already exist.

hadoop fs -rm -r output

Now we can run JAR file with command as below. Please note you may need to adjust path of jar file depending on where you exported it.

hadoop jar /home/student/workspace/WordCount/WordCount.jar WordCount input/ output/

Please note that this program will read all text files from input folder on HDFS and count total number of words in each file. Output is stored under output folder on HDFS.

Once run is successful, you should see below 2 files in output folder.

hadoop fs -ls output

-rw-r--r-- 1 hduser supergroup 0 2016-07-15 13:54 output/_SUCCESS

-rw-r--r-- 1 hduser supergroup 73 2016-07-15 13:54 output/part-r-00000

_SUCCESS file is an empty file indicating RUN was successful. To see output of run, view part-r-00000 file.

hdfs fs -cat output/part-r-00000

Sample Output

hi 2

is 2

test 1

Curtesy:

http://www.shabdar.org/hadoop-java/138-how-to-create-and-run-eclipse-project-with-a-mapreduce-sample.html

Combiners and Practitioners in Word Count

/* * To change this template, choose Tools | Templates * and open the template in the editor. */ import java.io.IOException; import java.util.StringTokenizer; import org.apache.hadoop.conf.Configuration; import org.apache.hadoop.fs.Path; import org.apache.hadoop.io.*; import org.apache.hadoop.mapreduce.Job; import org.apache.hadoop.mapreduce.Mapper; import org.apache.hadoop.mapreduce.Reducer; import org.apache.hadoop.mapreduce.lib.input.FileInputFormat; import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat; import org.apache.hadoop.util.GenericOptionsParser; /** * * @author sapna */ public class WordCount { public static class Map extends Mapper<LongWritable, Text, Text, IntWritable> { private final static IntWritable one = new IntWritable(1); // type of output value public void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException { StringTokenizer itr = new StringTokenizer(value.toString()," ,().?'`\"!@#$%^&*:;<>/"); // line to string token Text word = new Text(); // type of output key while (itr.hasMoreTokens()) { word.set(itr.nextToken()); // set word as each input keyword context.write(word, one); // create a pair <keyword, 1> } } } public static class Reduce extends Reducer<Text, IntWritable, Text, IntWritable> { public void reduce(Text key, Iterable<IntWritable> values,Context context) throws IOException, InterruptedException { IntWritable result = new IntWritable(); int sum = 0; // initialize the sum for each keyword for (IntWritable val : values) { sum += val.get(); } result.set(sum); context.write(key, result); // create a pair <keyword, number of occurences> } } //Partitioner //if string length 1 it will go to first reducer and 2 to second reducer and so on... public static class MyCustomPartitioner extends Partitioner<Text, IntWritable> { public int getPartition(Text key, IntWritable value, int numOfReducers) { String str = key.toString(); /*if(str.charAt(0) == 's'){ return 0; } if(str.charAt(0) == 'k'){ return 1%numOfReducers; } if(str.charAt(0) == 'c'){ return 2%numOfReducers; } else{ return 3%numOfReducers; }*/ if(str.length()==1) { return 0; } if(str.length()==2) { return 1; } if(str.length()==3) { return 2; } else { return 3; } } } '' // Driver program public static void main(String[] args) throws Exception { Configuration conf = new Configuration(); String[] otherArgs = new GenericOptionsParser(conf, args).getRemainingArgs(); // get all args if (otherArgs.length != 2) { System.err.println("Usage: WordCount <in> <out>"); System.exit(2); } // create a job with name "wordcount" //Job job = new Job(conf, "wordcount"); Job job = Job.getInstance(conf, "word count"); job.setJarByClass(WordCount.class); job.setMapperClass(Map.class); //uncomment the following lines to add the Partitioner //job.setPartitionerClass(MyCustomPartitioner.class); //Partitioner class //job.setNumReduceTasks(5); //Setting no of reducers job.setReducerClass(Reduce.class); // uncomment the following line to add the Combiner // job.setCombinerClass(Reduce.class); // set output key type job.setOutputKeyClass(Text.class); // set output value type job.setOutputValueClass(IntWritable.class); //set the HDFS path of the input data FileInputFormat.addInputPath(job, new Path(otherArgs[0])); // set the HDFS path for the output FileOutputFormat.setOutputPath(job, new Path(otherArgs[1])); //Wait till job completion System.exit(job.waitForCompletion(true) ? 0 : 1); }//end of main method }//end of class word count

Difference between combiner and partitioner

Combiner can be viewed as mini-reducers in the map phase. They perform a local-reduce on the mapper results before they are distributed further. Once the Combiner functionality is executed, it is then passed on to the Reducer for further work.

where as

Partitionercome into the picture when we are working on more than one Reducer. So, the partitioner decide which reducer is responsible for a particular key. They basically take theMapperResult(ifCombineris used thenCombinerResult) and send it to the responsible Reducer based on the keyWith Combiner and Partitioner scenario :

With Partitioner only scenario :

Examples :

Partitioner Example :

The partitioning phase takes place after the map phase and before the reduce phase. The number of partitions is equal to the number of reducers. The data gets partitioned across the reducers according to the partitioning function . The difference between a partitioner and a combiner is that the partitioner divides the data according to the number of reducers so that all the data in a single partition gets executed by a single reducer. However, the combiner functions similar to the reducer and processes the data in each partition. The combiner is an optimization to the reducer. The default partitioning function is the hash partitioning function where the hashing is done on the key. However it might be useful to partition the data according to some other function of the key or the value. -- Source

Without Combiner.

"hello hello there"=> mapper1 =>(hello, 1), (hello,1), (there,1)

"howdy howdy again"=> mapper2 =>(howdy, 1), (howdy,1), (again,1)Both outputs get to the reducer =>

(again, 1), (hello, 2), (howdy, 2), (there, 1)Using the Reducer as the Combiner

"hello hello there"=> mapper1 with combiner =>(hello, 2), (there,1)

"howdy howdy again"=> mapper2 with combiner =>(howdy, 2), (again,1)Both outputs get to the reducer =>

(again, 1), (hello, 2), (howdy, 2), (there, 1)Conclusion

The end result is the same, but when using a combiner, the map output is reduced already. In this example you only send 2 output pairs instead of 3 pairs to the reducer. So you gain IO/disk performance. This is useful when aggregating values.

The Combiner is actually a Reducer applied to the map() outputs.

If you take a look at the very first Apache MapReduce tutorial, which happens to be exactly the mapreduce example I just illustrated, you can see they use the reducer as the combiner :

job.setCombinerClass(IntSumReducer.class); job.setReducerClass(IntSumReducer.class);Curtesy: https://stackoverflow.com/questions/38562889/difference-between-combiner-and-partitionerHADOOP VERSION 3 INSTALLATION STEPSInstalling JAVA

Download JAVA from

http://www.oracle.com/technetwork/java/javase/downloads/index-jsp-138363.html

Download HADOOP from

http://archive.apache.org/dist/hadoop/core/

copy both java and hadoop to /usr/local$ sudo bash

password:

# mv jdk1.8.0 /usr/local/java

# exit

#mv hadoop-3.2.3 /usr/local/ hadoop

Change the ownership

sudo chown -R hadoop:hadoop hadoop

sudo chown -R hadoop:hadoop java

setting java and haoop path

~$gedit ~/.bashrcAdd the below code to end of theat fileexport JAVA_HOME=/usr/local/java

export PATH=$PATH:/usr/local/java/bin

export PATH=$PATH:/usr/local/java/sbin

export HADOOP_HOME=/usr/local/hadoop

export PATH=$PATH:$HADOOP_HOME/bin

export PATH=$PATH:$HADOOP_HOME/sbin

export HADOOP_MAPRED_HOME=$HADOOP_HOME

export YARN_HOME=$HADOOP_HOME

export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

export HADOOP_OPTS="-Djava.library.path=$HADOOP_HOME/lib/native"

export HADOOP_STREAMING=$HADOOP_HOME/share/hadoop/tools/lib/hadoop-streaming-3.2.3.jar

export HADOOP_LOG_DIR=$HADOOP_HOME/logs

export PDSH_RCMD_TYPE=ssh

Installing Software

$sudo apt-get updateIf your cluster doesn’t have the requisite software you will need to install it.

For example on Ubuntu Linux:$ sudo apt-get install sshsetup passphraseless ssh

Now check that you can ssh to the localhost without a passphrase:

$ ssh localhost

If you cannot ssh to localhost without a passphrase, execute the following commands:

$ ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa

$ cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

$ chmod 0600 ~/.ssh/authorized_keysCore-site.xml

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>fs.default.name</name>

<value>hdfs://localhost:9000</value>

</property>

</configuration>

Hdfs-site.xml

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.name.dir</name>

<value>file:///home/hadoop/hdfs/namenode</value>

</property>

<property>

<name>dfs.data.dir</name>

<value>file:///home/hadoop/hdfs/datanode</value>

</property>

</configuration>

Yarn-site,xml

<configuration>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.env-whitelist</name>

<value>JAVA_HOME,HADOOP_COMMON_HOME,HADOOP_HDFS_HOME,HADOOP_CONF_DIR,CLASSPATH_PREP END_DISTCACHE,HADOOP_YARN_HOME,HADOOP_MAPRED_HOME</value>

</property>

</configuration>

Mapred-site.xml

<configuration>

<property>

<name>mapreduce.framework.name</name> <value>yarn</value>

</property>

<property>

<name>mapreduce.application.classpath</name>

<value>$HADOOP_MAPRED_HOME/share/hadoop/mapreduce/*:$HADOOP_MAPRED_HOME/share/hadoop/mapreduce/lib/*</value>

</property>

</configuration>

https://codewitharjun.medium.com/install-hadoop-on-ubuntu-operating-system-6e0ca4ef9689

https://sites.google.com/site/sraochintalapudi/big-data-analytics/hadoop-mapreduce-programs

Verifying Hadoop Installation

Step 1: Name Node Setup

$ cd ~

$ hdfs namenode -format

Step 2: Verifying Hadoop dfs

$ start-dfs.sh

Step 3: Verifying Yarn Script

$ start-yarn.sh

Step 4: Accessing Hadoop on BrowserOn Hadoop Web UI, There are three web user interfaces to be used:

Name node web page: http://localhost:9870/dfshealth.html

Data node web page: http://localhost:9864/datanode.html

Yarn web page: http://localhost:8088/cluster

Comments

Post a Comment